AWS Lambda, Kinesis and Java : Streams (Part one of a three part blog.)

The previous post dealt with AWS Lambda and Java. Before we get hands-on with Lambda, I would like to introduce Kinesis, because Lambda is almost always used in conjunction with other services, and the other service in our case will be Kinesis. Because Kinesis is a bigger topic, we will cover it in three parts:

- A Kinesis stream: Kinesis fundamentals and the design we will be following in these posts

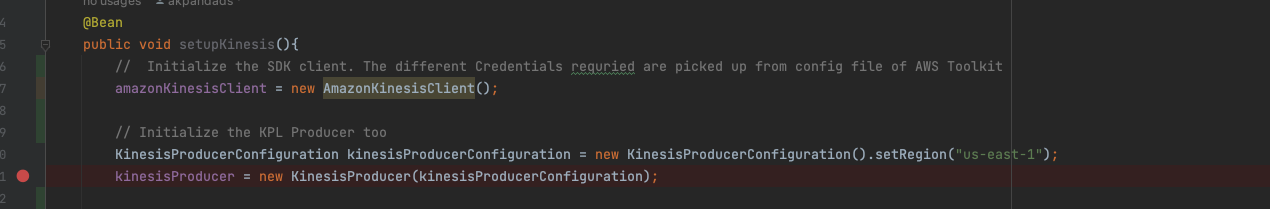

- Kinesis producer: creating a stream and publishing into it using both the SDK API and the KPL, and also the Kinesis agent.

- A Kinesis consumer: a Lambda function that runs whenever a message is published into the stream

Kinesis streams:

Kinesis Data Streams is a managed message streaming service provided by AWS, comparable to Apache Kafka with subtle differences. It comes in two capacity modes: provisioned and on-demand. Provisioned mode is selected when we know and can control the amount of data coming in per second, while on-demand mode is more elastic and scales up and down with demand. It is usually also the more expensive option.

A Kinesis stream, at the most basic level, consists of one or more shards. A shard is the physical location where the messages are actually written. Each shard has limits and rules that depend on the capacity mode in use.

All data written to or read from Kinesis must be in bytes.
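As a minimal sketch of what "in bytes" means in practice, the snippet below turns a text payload into UTF-8 bytes before publishing and back into text after reading. The class `RecordBytes` and its methods are hypothetical helpers for illustration, not part of any AWS library, and the JSON payload is made up.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Hypothetical helper for illustration; not part of the AWS SDK.
final class RecordBytes {

    // Encode a text payload (for example JSON) as UTF-8 bytes before publishing.
    static ByteBuffer encode(String payload) {
        return ByteBuffer.wrap(payload.getBytes(StandardCharsets.UTF_8));
    }

    // Decode the bytes received from the stream back into text.
    static String decode(ByteBuffer data) {
        // duplicate() leaves the caller's buffer position untouched
        return StandardCharsets.UTF_8.decode(data.duplicate()).toString();
    }

    public static void main(String[] args) {
        ByteBuffer data = encode("{\"orderId\": 42}");
        System.out.println(data.remaining() + " bytes on the wire");
        System.out.println(decode(data));
    }
}
```

Producer and consumer have to agree on the encoding (UTF-8 here is an assumption); Kinesis itself does not interpret the payload.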

Figure: Kinesis Provisioned vs On Demand

Shards in Provisioned service :

The AWS admin or architect needs to know the volume of data flowing in and out, and decide accordingly how many shards an application requires. Shards can be split and merged later, but those are very time-consuming operations.

The write rate is limited to 1 MB per second or 1,000 messages per second per shard.

The read rate is limited to a combined 2 MB per second per shard, shared by all consumers reading from that shard, and a shard accepts at most 5 GetRecords calls per second. This means that adding more consumers to a shard leaves less read throughput for each of them.
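The sizing decision can be sketched as simple arithmetic: take the per-shard limits of 1 MB/s or 1,000 messages per second for writes and 2 MB/s for combined reads, and pick the largest shard count any of them demands. The class `ShardEstimator` and the traffic figures are hypothetical; this is a rough estimate that ignores uneven distribution of messages across shards.

```java
// Hypothetical sizing helper for illustration; not part of the AWS SDK.
final class ShardEstimator {

    static final double WRITE_MB_PER_SHARD = 1.0;         // MB per second
    static final double WRITE_RECORDS_PER_SHARD = 1000.0;  // messages per second
    static final double READ_MB_PER_SHARD = 2.0;           // MB per second, all consumers combined

    // readMBps is the total read rate, i.e. the write rate times the number of consumers.
    static int requiredShards(double writeMBps, double recordsPerSecond, double readMBps) {
        int byWriteBytes = (int) Math.ceil(writeMBps / WRITE_MB_PER_SHARD);
        int byWriteRecords = (int) Math.ceil(recordsPerSecond / WRITE_RECORDS_PER_SHARD);
        int byReadBytes = (int) Math.ceil(readMBps / READ_MB_PER_SHARD);
        // A stream always has at least one shard.
        return Math.max(1, Math.max(byWriteBytes, Math.max(byWriteRecords, byReadBytes)));
    }

    public static void main(String[] args) {
        // 2 MB/s written as 1,000 messages per second, read by three consumers (6 MB/s in total)
        System.out.println(requiredShards(2.0, 1000, 6.0)); // read side dominates: 3 shards
    }
}
```

In this example the write side alone would need only two shards; it is the three consumers sharing the 2 MB/s read limit that push the count to three.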

Figure: Shards Provisioned Mode

Shards in on-demand mode :

When the capacity cannot be estimated, or data arrives in bursts and we do not want delays in processing, on-demand mode creates and deletes shards dynamically. By default a new on-demand stream has a capacity of 4 MB/s for writes and 8 MB/s for reads, which can scale up to 200 MB/s and 400 MB/s respectively.

Figure: SDK API vs KPL

Kinesis Stream Consumers:

Consumers are the applications that read data from the stream. Any application can read, but it needs to use either the AWS SDK APIs or the KCL (Kinesis Client Library). With the SDK, a simple GetRecords call is sufficient, passing a shard iterator obtained for the shard ID and a limit on the number of records.

Using the KCL is a little more complicated. One more thing to note is how it relates to the KPL: when the producer has aggregated messages with the KPL, the KCL deaggregates them automatically, whereas a consumer reading through the plain SDK has to deaggregate them itself.

The KCL uses DynamoDB internally to keep track of the messages that have been read, so one has to configure the DynamoDB write and read capacities carefully when using the KCL.

Resharding in streams:

A shard becomes a hot shard if too many messages end up in it. Given the limits on how much can be written to or read from a shard, exceeding them results in throttling: requests fail with an exception, throughput drops, and messages can eventually be lost.

The reverse is also possible: when very few messages are written to a shard, its capacity is underutilised while we still pay for the full capacity. Such a shard is a cold shard.

Amazon recognises these problems and provides the option of splitting or merging shards based on usage. This is needed only in provisioned mode, as on-demand mode already takes care of it.

Splitting:

When a shard becomes hot, it can be split into two new shards. This results in three shards: one parent and two children. All new messages end up in the child shards. The parent shard is closed for writes, stays readable, and is removed once its messages have expired. Splitting increases throughput.
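As background to the split: Kinesis assigns each message to a shard by taking the MD5 hash of its partition key, and each shard owns a range of those 128-bit hash values. A split divides the parent's range between the two children. The sketch below models that with plain Java; `ShardSplit`, `HashRange` and the partition key `customer-42` are hypothetical names for illustration, and splitting at the midpoint is just one possible choice of split point.

```java
import java.math.BigInteger;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical model of a shard split; these are not AWS SDK classes.
final class ShardSplit {

    // Largest 128-bit hash key: 2^128 - 1.
    static final BigInteger MAX_HASH = BigInteger.ONE.shiftLeft(128).subtract(BigInteger.ONE);

    // Inclusive range of hash keys owned by one shard.
    static final class HashRange {
        final BigInteger start;
        final BigInteger end;

        HashRange(BigInteger start, BigInteger end) {
            this.start = start;
            this.end = end;
        }

        boolean contains(BigInteger key) {
            return key.compareTo(start) >= 0 && key.compareTo(end) <= 0;
        }
    }

    // MD5 of the partition key, read as an unsigned 128-bit integer.
    static BigInteger hashKey(String partitionKey) {
        try {
            MessageDigest md5 = MessageDigest.getInstance("MD5");
            return new BigInteger(1, md5.digest(partitionKey.getBytes(StandardCharsets.UTF_8)));
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 not available", e);
        }
    }

    // Split the parent's range at its midpoint into two child ranges.
    static HashRange[] splitInHalf(HashRange parent) {
        if (parent.start.compareTo(parent.end) >= 0) {
            throw new IllegalArgumentException("range too small to split");
        }
        BigInteger newStart = parent.start.add(parent.end).add(BigInteger.ONE).shiftRight(1);
        return new HashRange[] {
            new HashRange(parent.start, newStart.subtract(BigInteger.ONE)),
            new HashRange(newStart, parent.end)
        };
    }

    public static void main(String[] args) {
        HashRange[] children = splitInHalf(new HashRange(BigInteger.ZERO, MAX_HASH));
        BigInteger key = hashKey("customer-42");
        System.out.println("child 0 owns key: " + children[0].contains(key));
        System.out.println("child 1 owns key: " + children[1].contains(key));
    }
}
```

Every key falls into exactly one child range, which is why a split spreads the load of a hot shard only if the hot traffic uses more than one partition key.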

Figure: Splitting Hot shards

Merging:

When shards are underutilised, two adjacent shards can be merged into a single new shard. All new messages go to the merged shard, while the existing messages stay readable in the parent shards until they expire.

Whenever resharding is done, there is a risk of reading records out of order. Consumers need to handle this scenario by making sure that processing of a child shard does not start until all messages from its parent shards have been processed.
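One way a consumer could enforce the parent-before-child rule is to sort the shards by lineage before reading them. The sketch below is a hypothetical in-memory model (`ShardOrdering` and its `Shard` class are illustrative, not SDK types); it assumes each shard knows the IDs of its parents, one after a split and two after a merge, and that a parent missing from the list has already expired.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical model for illustration; not part of the AWS SDK or the KCL.
final class ShardOrdering {

    static final class Shard {
        final String id;
        final List<String> parentIds; // empty, one parent (split) or two parents (merge)

        Shard(String id, String... parentIds) {
            this.id = id;
            this.parentIds = Arrays.asList(parentIds);
        }
    }

    // Returns shard IDs so that every parent appears before its children.
    static List<String> processingOrder(List<Shard> shards) {
        Map<String, Shard> byId = new LinkedHashMap<>();
        for (Shard shard : shards) {
            byId.put(shard.id, shard);
        }
        Set<String> ordered = new LinkedHashSet<>();
        for (Shard shard : shards) {
            visit(shard, byId, ordered);
        }
        return new ArrayList<>(ordered);
    }

    // Depth-first walk: emit all known parents first, then the shard itself.
    private static void visit(Shard shard, Map<String, Shard> byId, Set<String> ordered) {
        if (ordered.contains(shard.id)) {
            return;
        }
        for (String parentId : shard.parentIds) {
            Shard parent = byId.get(parentId);
            if (parent != null) { // a missing parent has expired and needs no processing
                visit(parent, byId, ordered);
            }
        }
        ordered.add(shard.id);
    }

    public static void main(String[] args) {
        List<Shard> shards = Arrays.asList(
            new Shard("child-a", "parent"),
            new Shard("child-b", "parent"),
            new Shard("parent"),
            new Shard("merged", "child-a", "child-b"));
        System.out.println(processingOrder(shards)); // [parent, child-a, child-b, merged]
    }
}
```

The ordering only decides which shard to start on; a real consumer would still have to read each parent to its end before moving on to the children.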

Figure: Merging cold shards

Resharding operations cannot be run in parallel and are time-consuming, which means only one split or merge can happen at a time. Kinesis streams in provisioned mode therefore do not scale natively; resharding has to be done manually.

Now that we have a high-level overview of Kinesis streams, in the next blog we will get our hands dirty and analyse the SDK API and KPL producers.
